Learning how to program is like learning to speak another language. It can be extremely frustrating at first, because one has to learn even the most basic things, like saying hello. Following the Programming Historian lessons, that is exactly what we learned to do first: say hello.

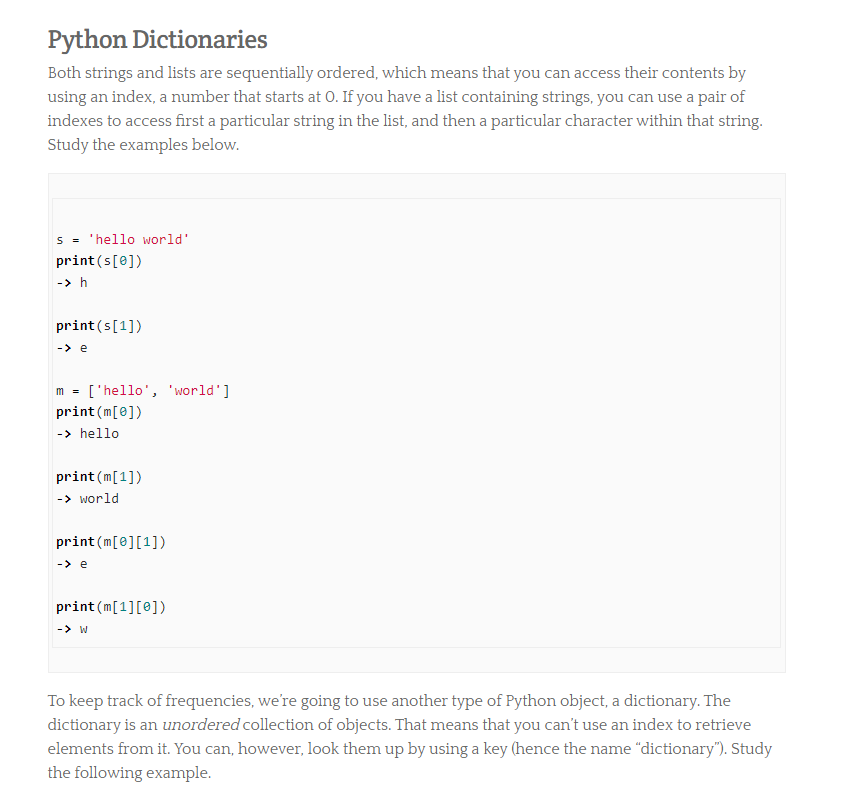

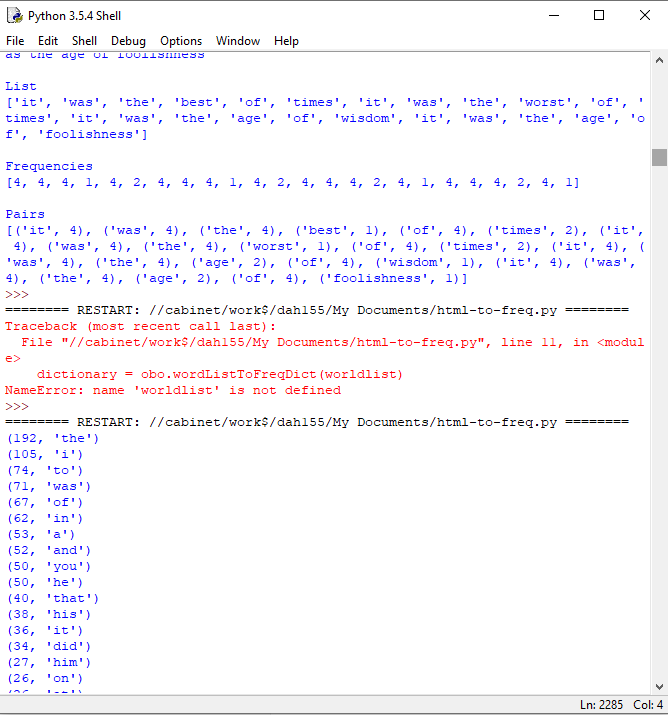

Having finished a first-year introductory computer science class, I found many of the tasks and concepts familiar, although I felt they were presented in a cursory manner. Here is a screenshot of the explanation of a dictionary:

For reference, my introductory computer science class spent well over a week on dictionaries, and some students still struggled. A single page explaining what a dictionary is will not be enough for history majors who think of the snake rather than the programming language when they hear “Python”, and it risks turning them away from programming altogether. I think many history majors will find the rapid pace of new concepts overwhelming, especially the ones that are difficult for students with no background in computer science.
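To give a rough sense of what that single page has to convey: a Python dictionary stores key-value pairs, so you look a value up by its key rather than by its position. The sketch below is my own illustration, not code from the lesson; the function name `count_words` and the sample sentence are hypothetical. It uses a dictionary to count how often each word appears in a list of words.

```python
def count_words(wordlist):
    """Return a dictionary mapping each word to the number of times it appears."""
    counts = {}
    for word in wordlist:
        # .get() returns 0 the first time a word is seen, instead of raising KeyError
        counts[word] = counts.get(word, 0) + 1
    return counts


# Hypothetical sample text, split into a list of words
wordlist = "it was the best of times it was the worst of times".split()
freq = count_words(wordlist)

print(freq["times"])         # 2
print(freq.get("hello", 0))  # 0, because "hello" is not a key in the dictionary
```

Even this small example relies on several ideas at once (keys, values, lookup, and a default for missing keys), which is part of why a one-page introduction can feel rushed.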

While I understand the desire to be succinct, the overwhelming pace may do more harm than good by deterring potential programming historians from coding. As with learning a new spoken language, especially at the beginning, an effort must be made to ensure the learner does not feel lost.

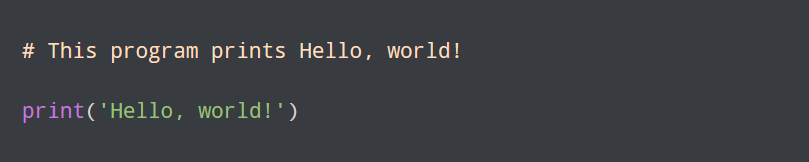

On a positive note, it was an excellent choice to present Python as the first programming language for historians. Python has a clear and simple syntax, which makes the code fairly readable even for someone who is not adept at programming. Here is the code to print “Hello world” in Python:

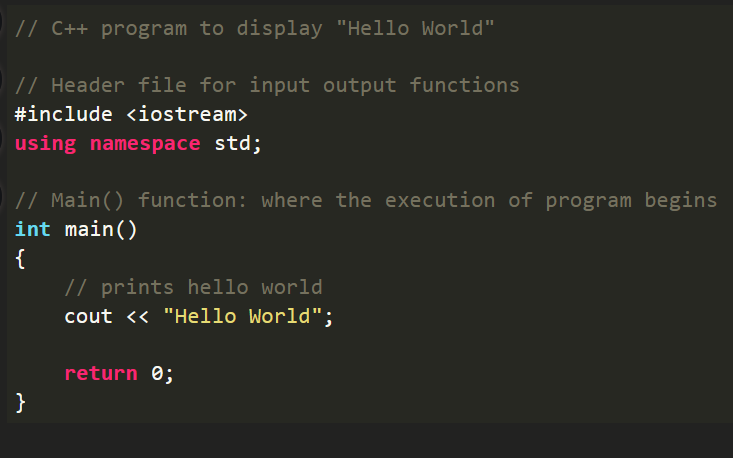

Compare that to the code to print “Hello World” in C++ (another programming language):

It is obvious which one is easier to read, and which one is easier to learn at first.

While I appreciate that the lesson focuses on the programming tasks historians are most likely to use, such as downloading web pages and working with HTML, this also means that historians won’t be exposed to all of the foundational knowledge. I don’t think this is necessarily a problem: in an ideal world a historian would be well versed in all the fundamentals of computer science, but in the real world, with finite time and deadlines, teaching a select number of applicable skills is very efficient.
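As a rough sketch of the kind of task meant here, the code below downloads a page with Python’s standard `urllib.request` module and then strips out the HTML tags to leave a list of words. This is my own simplified version under stated assumptions, not the lesson’s code: the function names `download_page`, `strip_tags`, and `words_from_html` and the sample HTML are hypothetical, the download assumes the page is UTF-8, and the regular-expression tag stripping ignores complications such as scripts and comments.

```python
import re
import urllib.request


def download_page(url):
    """Fetch a web page and return its HTML as a string (requires network access).

    Assumes the page is UTF-8; undecodable bytes are replaced rather than raising.
    """
    with urllib.request.urlopen(url) as response:
        return response.read().decode("utf-8", errors="replace")


def strip_tags(html):
    """Replace anything between < and > with a space, then tidy the whitespace.

    A deliberately simple approach: it does not handle <script> contents,
    HTML comments, or '>' characters inside attribute values.
    """
    text = re.sub(r"<[^>]*>", " ", html)
    # Collapse the runs of spaces and newlines left behind by removed tags
    return " ".join(text.split())


def words_from_html(html):
    """Turn an HTML string into a list of lowercase words."""
    return strip_tags(html).lower().split()


# Hypothetical sample HTML, so the example runs without a network connection
sample = "<html><body><h1>Old Bailey</h1><p>Trial of John Smith</p></body></html>"
print(words_from_html(sample))  # ['old', 'bailey', 'trial', 'of', 'john', 'smith']
```

To work with a live page, one would pass the result of `download_page(...)` to `words_from_html` instead of the sample string. Replacing tags with a space rather than deleting them keeps words from neighbouring elements (here “Bailey” and “Trial”) from being glued together.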

I think the lesson should place more emphasis on the importance of proper syntax: it is probably the most common source of errors and frustration for beginner programmers. A single wrong character or extra space can break an entire program, and this seemed to be a common point of confusion among my classmates. I personally ran into this when I accidentally typed “worldlist” instead of “wordlist”, which resulted in this error:

With proper emphasis on syntax, many beginner programmers will be more careful while writing their code, resulting in fewer errors and thus fewer hairs pulled out.
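One detail that may help beginners read their error messages: in Python, a misspelled variable name such as “worldlist” is usually reported as a `NameError` (assuming the misspelled name is read before anything is assigned to it), while a genuine syntax mistake, such as a missing closing parenthesis, is reported as a `SyntaxError` before any code runs. The sketch below is a hypothetical helper (`run_snippet` is my own name, not part of the lesson) that runs short snippets of code and reports which of these two errors occurred.

```python
def run_snippet(source):
    """Compile and run a snippet, returning "SyntaxError", "NameError", or "no error".

    Other kinds of exceptions are not caught and will propagate normally.
    """
    try:
        # Syntax is checked here, before anything is executed
        code = compile(source, "<snippet>", "exec")
    except SyntaxError:
        return "SyntaxError"
    try:
        # Run in a fresh namespace so snippets don't affect each other
        exec(code, {})
    except NameError:
        return "NameError"
    return "no error"


# Misspelled variable: the line is valid syntax, but the name doesn't exist
typo = 'wordlist = ["hello", "world"]\nprint(len(worldlist))'
# Missing closing parenthesis: invalid syntax, so nothing runs at all
missing_paren = 'print("hello world"'

print(run_snippet(typo))           # NameError
print(run_snippet(missing_paren))  # SyntaxError
```

Knowing which kind of error appeared narrows down where to look: a `SyntaxError` points to punctuation or indentation, while a `NameError` usually points to a misspelled or undefined name.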

Lastly, while learning programming may be incredibly boring or confusing to many history majors, I think it is something everyone should be exposed to. Even the most basic knowledge of what a function or a string is can go a long way, because these concepts will no longer be completely foreign. In the same way, someone who never studies advanced mathematics should still be taught addition, subtraction, and division, because that basic knowledge lets them hold at least a somewhat functional conversation with others about the topic. Overall, I think the programming experience is necessary, even if it may not be everyone’s favorite. It may even have the added benefit of making students glad they chose a history major over a computer science major.