Aligning assessment and experiential learning

I didn’t know what to expect as I rode the elevator up the Arts tower to interview for a research assistant position for a SOTL group. I certainly didn’t expect the wave of information and Dr. McBeth’s joyful energy. She, Harold Bull, and Sandy Bonny explained the project in a unique dialect: a mix of English and their shared academic speak. I hope they didn’t catch on to my confusion when they were throwing around the term MCQ, or multiple choice question (which, in my former profession, refers to the Medical Council exam). I realized that I had quite a lot to learn if I was going to succeed in this position. I’d need to learn their language.

The scholarship of teaching and learning cluster working group shared the project through a concept map that linked “Assessment” to different kinds of students, subjects, and teaching strategies. The concept map itself was overwhelming at first, but organizing information is in my skill set, so creating an index was a straightforward matter. Connecting that index to resources with EndNote was quite a different affair. I closed the program angrily after multiple failed attempts to format the citations in the way I wanted. I had listened carefully and taken notes with Dr. McBeth, but the gap between theory and practice was large. With some perseverance, I am now able to bend the program to my will. It is a useful tool but, like a large table saw or pliers used to pull teeth, it still frightens me.

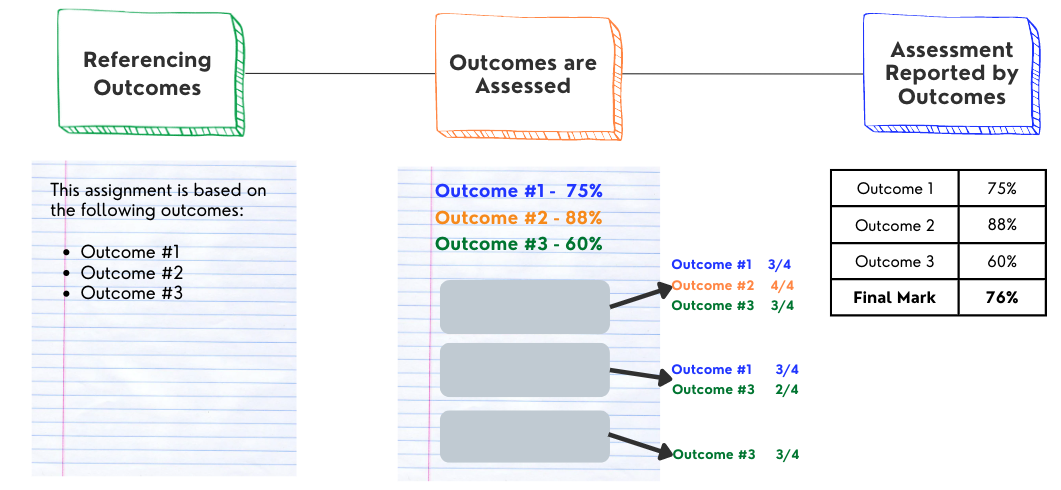

Working with the SOTL concept map, I had identified the three areas, and their sub-topics, which the group is most interested in exploring:

- Examination/Assessment
  - Ease of grading
  - After experiential learning
  - Multiple choice questions (MCQ)

- Type of Experience
  - Designed
  - Emergent
  - Prompted reflection and relativistic reasoning

- Subject Permeability
  - Alignment to common knowledge
  - Access to affordances for self-teaching and tangential learning

Well, I might as well move my things to the library and stay there until May. These topics cover a huge swath of pedagogical research. As I began reading, though, I soon saw that there were emerging patterns and overlaps among topics. Designed experiences overlapped with assessments. Multiple choice questions and cognition intersected. It was clear that while my index was neatly laid out in discrete cells in Microsoft Excel, the reality of the discourse was a lot more fluid and messy, and was more accurately reflected in the hand-written topic names, lines, and stickers of the concept map.

An interesting thing I discovered was that although I struggled at times in my methodology class in Term 1, the information and skills I learned there were useful in evaluating sources. I can ask questions and identify gaps where methodological choices aren’t outlined clearly. To be able to use my skills in a practical manner immediately after acquiring them is very exciting.

Currently I am focused on the topic of Examination/Assessment, which has the broadest scope of all topics identified. Two articles about student perception of experiential learning and peer assessment were intriguing to me. They make clear that student views and assumptions about experiential learning and peer assessment may not align with data on actual learning. This resonates with all the learning I’ve been doing about subjectivity/objectivity and research ethics. Our perceptions and assumptions can be very powerful but they shouldn’t be taken as dominant knowledge without further inquiry.

Some authors make strong claims about their findings even though the description of their methodological processes is lacking. Little, J. L., Bjork, E. L., Bjork, R. A., & Angello, G. (2012) assert that their findings “vindicate multiple-choice tests, at least of charges regarding their use as practice tests” (p. 1342). I am hesitant to completely agree with their claim based on this article alone because certain methodological details aren’t addressed, such as participants’ demographics and level of study. They also changed variables in Experiment 2 (feedback and timing for answering questions; those without feedback got more time to answer) and used a pool of participants from another area of the country (United States). The work of Gilbert, B. L., Banks, J., Houser, J. H. W., Rhodes, S. J., & Lees, N. D. (2014) also leaves out certain design details: the interview and questionnaire items are not appended, and the level of supervision and mentorship (and any variation thereof) that different students received in different settings is not explained. This doesn’t necessarily mean that the authors didn’t make very careful and thoughtful choices, but that either the description of their research needs to be amended or further study is necessary before making definitive claims.

Conversely, the work of VanShenkhof, M., Houseworth, M., McCord, M., & Lannin, J. (2018) on peer evaluation and Wilson, J. R., Yates, T. T., & Purton, K. (2018) on student perception of experiential learning assessment were both very detailed in their description of their research design and the methodological choices made.

I wonder if the variability in data presentation is reflective of the varying skills of researchers as writers. Perhaps it is more reflective of the struggle of researchers to move toward an evidence-based practice in the scholarship of teaching and learning. Maybe it is both.

While I will not be creating a nest in the library and making it my primary residence, there is still a lot to read, learn, and uncover. I look forward to journeying together with you.

Summary

· Sources must be carefully evaluated to ensure quality of research design and findings.

· Delayed elaborate feedback produced a “small but significant improvement in learning in medical students” [Levant, B., Zuckert, W., & Peolo, A. (2018), p. 1002].

· Well-designed multiple-choice practice tests with detailed feedback may facilitate recall of information pertaining to incorrect alternatives, as well as correct answers [Little, J. L., Bjork, E. L., Bjork, R. A., & Angello, G. (2012)].

VanShenkhof, M., Houseworth, M., McCord, M., & Lannin, J. (2018) have created an initial perception of peer assessment (PPA) tool for researchers who are interested in studying peer assessment in experiential learning courses. They found that positive and rich peer assessment likely occurs in certain situations:

- With heterogeneous groups

- In a positive learning culture (created within groups and by the instructor)

- With clear instructions and a clear peer assessment methodology

Wilson, J. R., Yates, T. T., & Purton, K. (2018) found:

- “Student understanding is not necessarily aligned with student engagement, depending on choice of assessment” – Journaling seemed best at demonstrating understanding but received a low engagement score from students (p. 15).

- Students seemed to prefer collaborative assessments – seen as having more learning value in addition to being more engaging (p. 14).

- This pilot indicates that student discomfort doesn’t necessarily have a negative impact on learning (pp. 14-15).

This is the first in a series of blog posts by Lindsay Tarnowetzki. Their research assistantship is funded by and reports to the Scholarship of Teaching & Learning Aligning assessment and experiential learning cluster at USask.

Lindsay Tarnowetzki is a PhD student in the College of Education. They completed their Master’s degree in Communication (Media) Studies at Concordia University and their undergraduate degree in English at the University of Saskatchewan. They worked at the Clinical Learning Resource Centre at the University of Saskatchewan for three years as a Simulated Patient Educator. They are interested in narrative as it relates to social power structures. Lindsay shares a home with their brother and one spoiled cat named Peachy Keen.