by Virginia Wilson

Director, Centre for Evidence Based Library and Information Practice

University of Saskatchewan

On May 15, 2018, Brain-Work featured a post entitled Future of Brain-Work. After 4 years of publishing the blog, we (the Brain-Work advisory committee*) wanted to know what our readers thought and whether we should make some substantial changes. Readers were encouraged to take the short Brain-Work survey. Many thanks to everyone who did!

From the stark first question (Should the Brain-Work blog continue to publish?) to the open-ended “Is there anything else we need to know?” you provided us with useful and thoughtful responses that have assisted the blog advisory group in moving forward with Brain-Work. Here’s an overview of what the survey told us:

• Should the Brain-Work blog continue to publish? Overwhelmingly, yes, which is a very gratifying response. 91% of respondents said yes, 4.3% said no, and 4.3% said yes, but maybe the format should change.

• Brain-Work currently publishes every Tuesday. How frequently do you want to read posts on the blog? 47% of respondents said weekly, 15% said every other week, 19% said monthly, and 19% weren’t fussy.

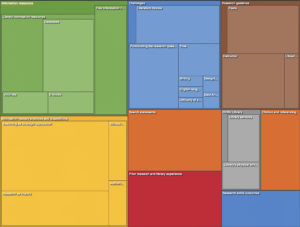

• The scope of the blog is broad: research, EBLIP, and librarianship. What specific topic(s) are most interesting to you? After a quick qualitative analysis of the responses, a majority of the respondents read the blog for research: practical takes, research in practice, and integrating research work with library work. The next most popular answer was all – all the topics represented by Brain-Work. Responses were a rather maddening mix of variety and research focus, making it difficult to decide whether we should take the plunge and become a blog focused specifically on librarians as researchers. As one participant noted, “There are so many librarian blogs about librarianship as practice. The research angle is unique to Brain-Work.”

• Based on the answers to the question “What kinds of blog posts do you prefer to read?” Brain-Work readers like short, timely articles containing practical, how-to information. Many respondents expressed an interest in research summaries as well.

• The idea of peer-reviewing the blog posts came up, so, without thinking too much about what that would look like, we asked our readers. 57% said no, Brain-Work does not need to publish peer-reviewed blog posts. 26% said yes, and 17% declined to answer that question. That’s somewhat of a relief, to be honest!

At the end of the survey, we asked if there was anything else we should know. Many of you said lovely things and commended the blog authors for all the work they put into their posts. The diversity of perspectives was also appreciated. And finally, our buggy comments function came to the fore. Believe me, it’s as frustrating for us as it is for you. Sometimes the comment form works, and sometimes it doesn’t and the commenter receives a “you are forbidden to comment” error message. Hardly friendly and welcoming! This is a known issue that has no solution that we can find. Our workaround is to have commenters email their comments to me, and I will post them on their behalf, provided I’m not forbidden to comment, too, on that particular day. Another way to interact with the blog posts is to comment on Twitter. Both @CEBLIP and @VirginiaPrimary promote the blog posts when they are published, and a conversation on Twitter would be welcome.

Because the editor’s assignment has changed (my assignment – I’m the new liaison librarian for the College of Agriculture and Bioresources and the School of Environment and Sustainability at the U of S), we’ve scaled publishing back to every second week. The focus will remain the same (EBLIP, research, or librarianship). The comment function continues to be spotty, but we are contemplating a change of platforms. That won’t happen until next year now.

So, thank you, dear Readers, for weighing in on Brain-Work. Without the readers and the authors, this blog would not be heading into its 5th year of publication. Thank you for your continued support and hopefully your continued enjoyment.

*The Brain-Work advisory committee consists of DeDe Dawson, Shannon Lucky, and Virginia Wilson.

(Editor’s note: Brain-Work is hosted by the University of Saskatchewan and there is a problem with the comments that cannot be resolved. If you try to comment on this or any blog post and you get a “forbidden to comment” error message, please send your comment to virginia.wilson@usask.ca and I will post the comment on your behalf and alert the author. We apologize for this annoying problem.)