By Tasha Maddison, Saskatchewan Polytechnic

Transitioning library instruction to an online platform allows for greater flexibility in content provision, as well as further opportunities for assessment, provided that learners access and fully engage with the content. Saskatchewan Polytechnic has a new online library module for faculty, intended to complement face-to-face information literacy sessions. The course covers the entire research process, from identifying an information need to writing and formatting academic papers. Opportunities to assess students’ learning are built into each learning outcome through discussion posts and quizzes.

The key to a successful blended learning project is “purposefully integrated assessment, both formative and summative, into the actual design of the course” (Arora, Evans, Gardner, Gulbrandsen, & Riley, 2015, p. 249). The authors put this into practice by evaluating student learning through quizzes and discussions. Their goal was to “create an online community of active and engaged learners”, and through instructor prompts, “students were directed to state what they found valuable in the peers’ response, what questions they had … and also what support they would add to make their peers’ argument stronger” (Arora et al., 2015, p. 239). The researchers noted that the assessment activities “layered the learning experience – helped to generate and sustain student interest and enthusiasm for the course material to fashion a vibrant community of active learners in the process of meaning- and knowledge-making” (p. 241). Perhaps their most compelling statement is that “students saw the online classroom as an active learning space, not just a repository for materials or a place to take quizzes” (p. 248).

Students in our online course were informally evaluated on their responses to three discussion posts. An instructor’s presence and availability to the online audience is vital both for student success and for students’ engagement with the learning materials. Students are more likely to participate in a conversation if they feel that someone is reviewing their posts and is prepared to respond when necessary or appropriate. Discussion posts were scheduled at the midway point of each learning outcome so that students could reflect on the information just covered and build a foundation for the material that followed later in the outcome. A librarian reviewed all content and responded to most of the discussion posts, providing feedback and suggestions. Anecdotally, responses were thoughtful and thorough, demonstrating a high level of comprehension and a strong connection with the content.

Participation in discussion posts allows students to synthesise their thoughts about a particular topic and, more importantly, to learn from their peer group. Students can review the views shared by their colleagues and learn from their experiences. According to Arora et al. (2015), discussion posts help students develop individual skills such as “critical reading and analysis through close reading and textual analysis” (p. 243) and build community by “encouraging students to work together on their interpretive skills and in negotiating knowledge” (p. 244). This form of assessment is meaningful to the instructor as well, since it reveals each student’s understanding of the material.

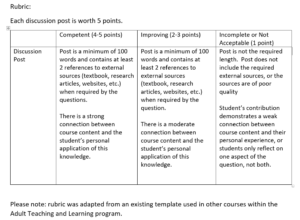

This course was piloted in July 2018. Discussions were included in the pilot but were not formally assessed at the time. A rubric has since been developed to evaluate students’ discussion posts in the next iteration of the course this spring. The rubric will help students gauge how they are doing in the course and identify areas for growth.

To strengthen formative assessment of online library instruction, librarians should include a variety of measurement instruments that fully address student learning. As Lockhart (2015) recognizes, “it is very important that the academic programme continually tests and builds the information literacy skills of students” (p. 20). This can be accomplished by developing strong partnerships with instructors so that librarians can adequately analyse student learning and success.

The module discussed here was developed by Diane Zerr and Tasha Maddison, librarians at Saskatchewan Polytechnic, and is used by students in the Adult Teaching and Learning program.

References

Arora, A., Evans, S., Gardner, C., Gulbrandsen, K., & Riley, J. E. (2015). Strategies for success: Using formative assessment to build skills and community in the blended classroom. In S. Koç, X. Liu, & P. Wachira (Eds.), Assessment in online and blended learning environments (pp. 235-251). [EBSCOhost eBook Collection]. Retrieved from https://ezproxy.saskpolytech.ca/login?url=https://search.ebscohost.com/login.aspx?direct=true&db=nlebk&AN=971840&site=ehost-live&scope=site

Lockhart, J. (2015). Measuring the application of information literacy skills after completion of a certificate in information literacy. South African Journal of Libraries and Information Science, 81(2), 19-25. doi:10.7553/81-2-1567

This article gives the views of the author and not necessarily the views of the Centre for Evidence Based Library and Information Practice or the University Library, University of Saskatchewan.